Description

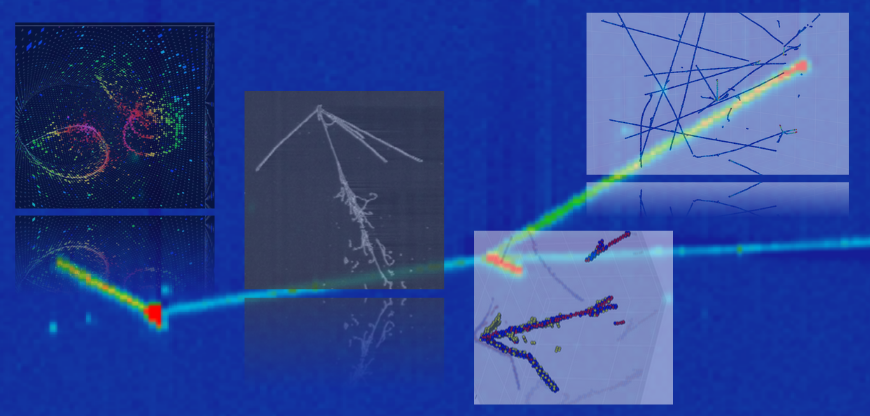

This report presents a neural network–based approach for the Baikal-GVD experiment to separate neutrino-induced events from background events caused by extensive air showers (EAS). Two Transformer encoder models were developed for different stages of the data processing pipeline. The first, trained on Monte Carlo simulated raw optical module hits with spatial, temporal, and charge information, serves as a fast pre-filter. At a fixed neutrino exposure of 99%, it achieves EAS suppression factors from 2.6 (≥5 hits on two strings) up to 17 (≥16 hits on two strings). The second model, trained on noise-filtered signal hits, is designed for the final processing stages, where it preserves 64% of neutrino-induced events while achieving a background suppression factor of 10^6, making it suitable for estimating the integral neutrino flux. To improve applicability to experimental data, we consider domain-adversarial training with a gradient reversal layer, enabling the networks to learn simulation-independent features.
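The suppression factors quoted above are evaluated at a fixed neutrino working point. A minimal sketch of that bookkeeping follows. It rests on two assumptions that are not stated in the abstract: that "neutrino exposure of 99%" means the fraction of simulated neutrino events kept by a cut on the classifier score, and that the suppression factor is the total number of EAS events divided by the number that pass the same cut. The function names and the toy scores are hypothetical and are not Baikal-GVD code or results.

```python
import math


def threshold_at_signal_fraction(signal_scores, keep_fraction):
    """Return the score cut such that at least `keep_fraction` of the
    signal events satisfy score >= cut (assumed working-point definition)."""
    if not signal_scores:
        raise ValueError("signal_scores must not be empty")
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")
    ordered = sorted(signal_scores, reverse=True)
    # Number of highest-scoring signal events that must survive the cut.
    n_keep = min(len(ordered), max(1, math.ceil(keep_fraction * len(ordered))))
    return ordered[n_keep - 1]


def suppression_factor(background_scores, cut):
    """Total background events divided by those passing score >= cut.
    Returns math.inf when no background event passes."""
    n_pass = sum(1 for s in background_scores if s >= cut)
    if n_pass == 0:
        return math.inf
    return len(background_scores) / n_pass


# Toy classifier outputs (illustrative only, not experimental values).
neutrino_scores = [0.9, 0.8, 0.7, 0.2]
eas_scores = [0.1, 0.3, 0.75, 0.05]

cut = threshold_at_signal_fraction(neutrino_scores, keep_fraction=0.75)
factor = suppression_factor(eas_scores, cut)
print(f"cut = {cut}, suppression factor = {factor}")
```

With real classifier outputs, `keep_fraction=0.99` would correspond to the pre-filter working point under the assumption above, and the same two functions could be applied separately to each hit-multiplicity selection.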