Description

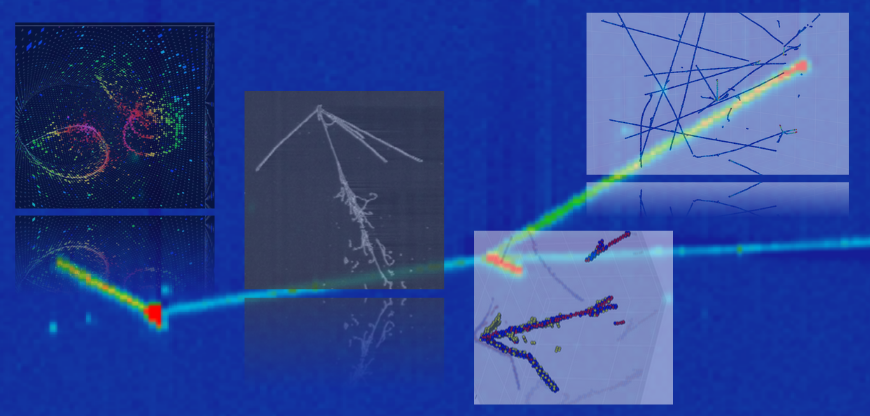

The FASERCal detector, proposed as an off-axis neutrino detector for the FASER experiment to operate during LHC Run 4, will produce sparse, 3D voxelized data that call for advanced deep-learning methods for neutrino event reconstruction. We present a hybrid architecture in which a Sparse Submanifold Convolutional Network (SSCN) efficiently tokenizes voxel hits into patch embeddings. These embeddings are processed by a hierarchical Transformer encoder whose attention mechanisms capture features at multiple spatial scales, learning both fine-grained local shower structures and the global event topology. The model is trained with a two-stage transfer-learning approach. A self-supervised pre-training phase uses a dual-objective Masked Autoencoder (MAE), combining generative reconstruction with contrastive learning, to learn a robust physical representation. The resulting encoder is then fine-tuned for multi-task classification and kinematic regression using task-specific cross-attention heads. On simulated data, the framework achieves high-fidelity reconstruction with stable performance and shows first signs of sensitivity to $\nu_{\tau}$ charged-current (CC) interactions and to charm production in neutrino events.
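To make the sparse tokenization and the dual-objective pre-training concrete, here is a minimal, standard-library sketch. It is not the authors' implementation. The hit format `(x, y, z, charge)` on an integer voxel grid, the cubic `patch_size`, the `mask_ratio`, the loss weight `weight`, and all function names are hypothetical. InfoNCE is used as a stand-in, since the abstract does not name its contrastive loss. The learned SSCN and Transformer are not modelled. The sketch only shows how occupied voxels can be grouped into patches without touching empty space, how MAE-style masking splits those patches, and how a reconstruction term and a contrastive term combine into one loss.

```python
import math
import random
from collections import defaultdict


def group_into_patches(hits, patch_size):
    """Group sparse voxel hits (x, y, z, charge) into non-empty cubic patches.

    Only occupied voxels are visited, so the cost scales with the number of
    hits rather than the detector volume. In the described model an SSCN
    would embed each patch; here we only build the sparse patch index.
    """
    patches = defaultdict(list)
    for x, y, z, q in hits:
        key = (x // patch_size, y // patch_size, z // patch_size)
        patches[key].append((x, y, z, q))
    return dict(patches)


def random_mask(keys, mask_ratio, rng):
    """MAE-style split of patch keys into (visible, masked) lists."""
    keys = sorted(keys)
    rng.shuffle(keys)
    n_mask = int(mask_ratio * len(keys))
    return keys[n_mask:], keys[:n_mask]


def mse(pred, target):
    """Mean squared error between two equal-length feature vectors."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def info_nce(anchors, positives, temperature=0.1):
    """InfoNCE: anchors[i] should match positives[i] against all positives[j].

    Uses cosine similarity; assumes no embedding is the zero vector.
    """
    def cos(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        na = math.sqrt(sum(x * x for x in a))
        nb = math.sqrt(sum(y * y for y in b))
        return dot / (na * nb)

    loss = 0.0
    for i, a in enumerate(anchors):
        logits = [cos(a, p) / temperature for p in positives]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
        loss += log_sum - logits[i]  # cross-entropy with the matching index
    return loss / len(anchors)


def dual_objective(pred_masked, true_masked, z_view1, z_view2, weight=0.5):
    """Reconstruction loss on masked patches plus a weighted contrastive term."""
    recon = sum(mse(p, t) for p, t in zip(pred_masked, true_masked)) / len(true_masked)
    return recon + weight * info_nce(z_view1, z_view2)


# Usage on a toy event with five hits and 4x4x4-voxel patches (hypothetical values).
rng = random.Random(0)
hits = [(0, 0, 0, 1.0), (1, 0, 0, 2.0), (5, 5, 5, 0.5), (9, 1, 0, 1.5), (9, 9, 9, 3.0)]
patches = group_into_patches(hits, patch_size=4)
visible, masked = random_mask(patches.keys(), mask_ratio=0.75, rng=rng)
print(len(patches), len(visible), len(masked))  # → 4 1 3
```

Grouping by integer division keeps the patch index sparse, which mirrors why submanifold sparse convolutions suit this kind of data: empty voxels never enter the computation. In a real pipeline the reconstruction target, the choice of contrastive views, and the weighting between the two terms would be design decisions of the model, not the fixed values assumed here.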