Description

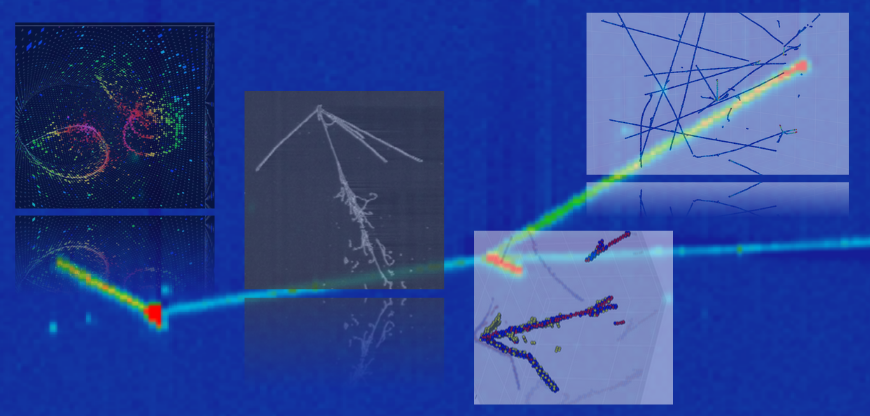

We present what is, to our knowledge, the first sensor-level foundation model (FM) for neutrino detectors, trained directly on simulated 3D liquid argon time projection chamber (LArTPC) charge data without manual labels. The goal is simple: build a scalable model that learns the underlying physics automatically from data, then adapts to event reconstruction, particle identification (PID), calibration, and other tasks using only a small labelled sample. I will discuss two complementary self-supervised approaches. The first is masked autoencoding, which forces the model to internalize detector response and interaction structure by reconstructing randomly masked portions of particle trajectories. The second is self-distillation, a teacher-student training setup that uses data-corrupting augmentations to build robust representational invariances. We share what makes these approaches work well with LArTPC data and show that our model delivers large sample-efficiency gains on downstream tasks; for example, fine-tuning on just 100 example images yields an F1 score above 98% when segmenting tracks and showers. The net effect is an extensible, physics-aware backbone for LArTPC data.
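As a rough illustration of the two self-supervised ingredients named above, the following NumPy sketch shows (a) a random split of a sparse 3D charge point cloud into visible and masked subsets, as a masked autoencoder would need, and (b) an exponential-moving-average (EMA) teacher update, which is a common choice in self-distillation setups. Everything here is an assumption made for illustration: the function names `random_mask` and `ema_update`, the `(x, y, z, charge)` layout, the 60% mask ratio, the per-point masking (the talk describes masking portions of trajectories), and the EMA rule itself are not taken from the talk.

```python
import numpy as np


def random_mask(points, mask_ratio=0.6, rng=None):
    """Split a point cloud of shape (N, 4) into visible and masked subsets.

    Hypothetical layout: columns are x, y, z, charge. Masking is per point,
    which is a simplification of masking contiguous trajectory segments.
    """
    rng = np.random.default_rng() if rng is None else rng
    n = points.shape[0]
    n_masked = int(round(mask_ratio * n))
    perm = rng.permutation(n)
    masked_idx = np.sort(perm[:n_masked])
    visible_idx = np.sort(perm[n_masked:])
    # The encoder would see only `visible`; the decoder's target is `masked`.
    return points[visible_idx], points[masked_idx]


def ema_update(teacher, student, momentum=0.99):
    """Return teacher weights moved toward the student: t <- m*t + (1-m)*s."""
    return {
        name: momentum * teacher[name] + (1.0 - momentum) * student[name]
        for name in teacher
    }


# Toy event: 1000 charge depositions with random coordinates and charge.
rng = np.random.default_rng(0)
event = rng.random((1000, 4))
visible, masked = random_mask(event, mask_ratio=0.6, rng=rng)

# Toy parameter dictionaries standing in for network weights.
teacher = {"w": np.zeros(3)}
student = {"w": np.ones(3)}
teacher = ema_update(teacher, student, momentum=0.99)
```

In a real pipeline the masked subset would serve as the reconstruction target for a decoder, and the teacher would be fed a less heavily corrupted view of the event than the student; both details are omitted here.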