AI/ML Data Challenge:

An AI/ML Data Challenge is a (semi-)virtual event that invites public participation in solving a research challenge. We will cast physics research challenges as AI/ML tasks and promote AI/ML research for the neutrino physics community. A data challenge consists of five key elements:

- Question … the core research question to be answered

  - e.g. "Can we resolve the pile-up of many (~20) neutrino interactions in the LArTPC at the DUNE near detector?"

- Impact … the research impact brought by addressing the challenge

  - e.g. "Necessary to enable physics programs at the DUNE near detector."

-

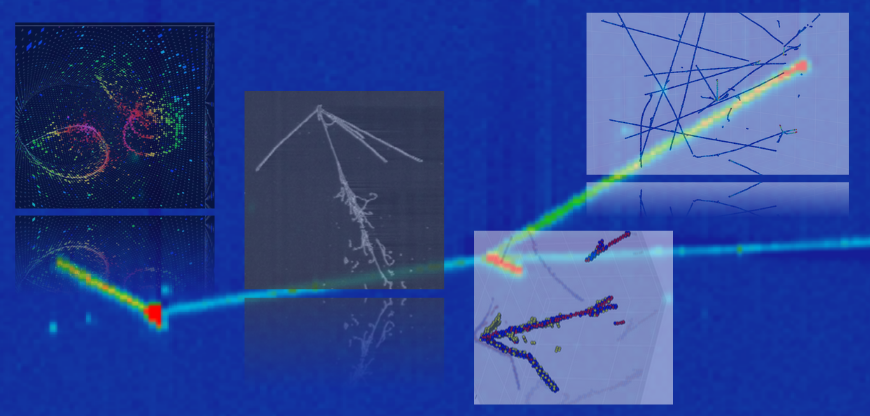

Datasets … accessible, curated data designed for an AI task

- e.g. Simulated datasets from the TPCs (3D scene of particle trajectories) and optical signal (digitized waveform from optical detectors)

- Task … what AI/ML models are supposed to perform on the datasets to bring the impact

  - e.g. Panoptic segmentation at three levels: semantic, particle instance, and interaction instance

- Metrics … measures of success for the submitted AI/ML model solutions

  - e.g. Adjusted Rand Index (ARI) for clustering performance at each of the three levels defined for the task
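To make the example metric concrete, the sketch below computes the Adjusted Rand Index between a true and a predicted labeling of the same points, and applies it separately at the three segmentation levels named in the task example. It is only an illustration of how ARI behaves: the per-point label layout, the dictionary keys, and the toy labels are assumptions made here, not the challenge's actual data format or official scoring code.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(truth, pred):
    """Adjusted Rand Index between two labelings of the same points."""
    if len(truth) != len(pred):
        raise ValueError("label sequences must have the same length")
    total_pairs = comb(len(truth), 2)
    if total_pairs == 0:
        return 1.0  # fewer than two points: nothing to disagree on
    # Count point pairs grouped together in both labelings, and in each one.
    pairs_both = sum(comb(c, 2) for c in Counter(zip(truth, pred)).values())
    pairs_truth = sum(comb(c, 2) for c in Counter(truth).values())
    pairs_pred = sum(comb(c, 2) for c in Counter(pred).values())
    expected = pairs_truth * pairs_pred / total_pairs  # chance-level agreement
    max_index = 0.5 * (pairs_truth + pairs_pred)
    if max_index == expected:
        # Degenerate case: both labelings are all-one-cluster or all-singletons.
        return 1.0
    return (pairs_both - expected) / (max_index - expected)


# Hypothetical per-point labels for a toy event with 6 points.
truth = {
    "semantic": [0, 0, 0, 1, 1, 1],
    "particle": [0, 0, 1, 2, 2, 2],
    "interaction": [0, 0, 0, 1, 1, 1],
}
pred = {
    "semantic": [1, 1, 1, 0, 0, 0],     # same grouping, label names swapped
    "particle": [0, 0, 0, 1, 1, 1],     # merges two true particles into one
    "interaction": [0, 0, 0, 1, 1, 1],  # identical to truth
}

scores = {level: adjusted_rand_index(truth[level], pred[level]) for level in truth}
for level, score in scores.items():
    print(f"{level}: ARI = {score:.3f}")
```

Because ARI compares groupings rather than label values, the swapped label names at the semantic level still count as a perfect match, while merging two particles lowers the particle-level score.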

Each data challenge is expected to last about 6 months from the release of its datasets. During this period, participants may develop AI/ML models and submit solutions for evaluation. We plan to maintain a leaderboard.

AI/ML Data Olympic:

It is an in-person event held approximately every 6 months to close the ongoing data challenges and open new ones. At the closure, we announce the winner of each challenge and invite them to present their AI/ML models. New data challenges that are ready to start will also be announced. A short (1 or 2 day) workshop will also be held to make the new data challenges accessible.